Projects

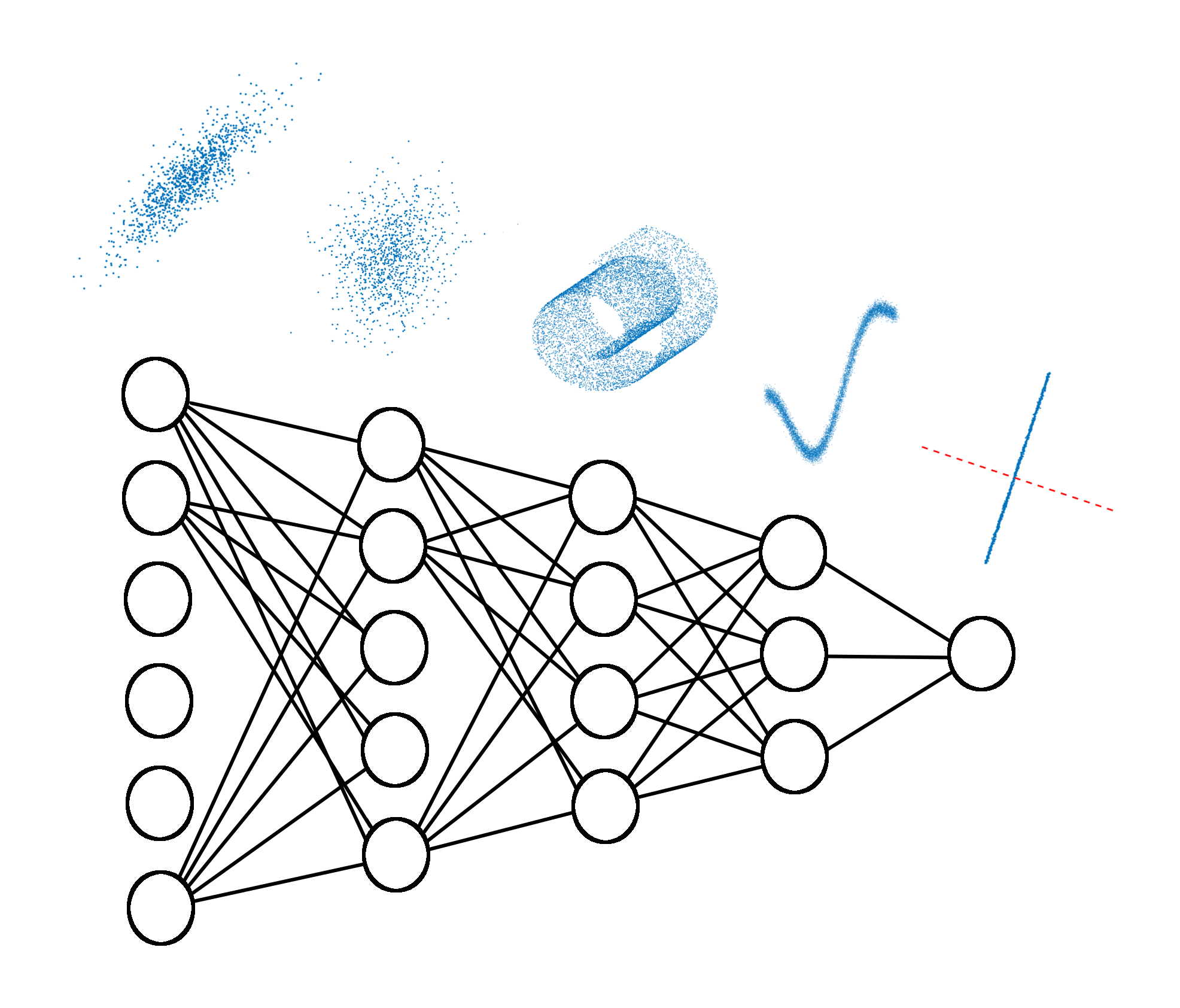

Intrinsic Dimension of Data Representations in Deep Networks

Deep neural networks transform their inputs across multiple layers.

In this project we studied the intrinsic dimensionality (ID) of data representations, i.e. the minimal number of parameters needed to describe a representation. We estimated the ID in multiple CNNs with the TWO-NN algorithm and found that:

- the ID is much lower than the number of units in each layer (the embedding dimension, ED)

- the ID along the layers has a typical “hunchback” shape

- in the last hidden layer the ID strongly predicts classification performance

- even in the last hidden layer, representations are curved.
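As a rough illustration of the TWO-NN estimator mentioned above, the sketch below computes, for each data point, the ratio between the distances to its second and first nearest neighbours, and then fits the slope of the empirical distribution of these ratios on a log scale. It is a minimal NumPy sketch under stated assumptions, not the code from the repository: the function name `twonn_id`, the brute-force distance computation and the `discard_fraction=0.1` default are illustrative choices.

```python
import numpy as np


def twonn_id(points, discard_fraction=0.1):
    """Estimate the intrinsic dimension of `points` (n_samples x n_features).

    Hypothetical helper: a brute-force sketch of a two-nearest-neighbour
    estimator, suitable only for a few thousand points.
    """
    if not 0.0 < discard_fraction < 1.0:
        raise ValueError("discard_fraction must lie strictly between 0 and 1")

    x = np.asarray(points, dtype=float)

    # Pairwise squared Euclidean distances (O(n^2) memory).
    sq_norms = np.sum(x ** 2, axis=1)
    d2 = sq_norms[:, None] + sq_norms[None, :] - 2.0 * (x @ x.T)
    np.maximum(d2, 0.0, out=d2)   # clip small negative values from round-off
    np.fill_diagonal(d2, np.inf)  # exclude each point from its own neighbours

    # Distances to the first and second nearest neighbour of every point.
    nearest = np.sqrt(np.partition(d2, 1, axis=1)[:, :2])
    r1, r2 = nearest[:, 0], nearest[:, 1]

    # Ratio mu = r2 / r1, skipping duplicated points (r1 == 0).
    keep = r1 > 0
    mu = np.sort(r2[keep] / r1[keep])
    m = mu.size

    # Empirical cumulative distribution of mu; the largest ratios are
    # discarded because they are the most sensitive to density variations.
    f_emp = np.arange(1, m + 1) / m
    n_fit = int(np.floor(m * (1.0 - discard_fraction)))
    log_mu = np.log(mu[:n_fit])
    y = -np.log(1.0 - f_emp[:n_fit])

    # Under the model, y = ID * log(mu): least-squares slope through the origin.
    return float(np.sum(log_mu * y) / np.sum(log_mu ** 2))
```

For example, points sampled from a 2D plane that is linearly embedded in 10 dimensions should give an estimate close to 2, well below the embedding dimension of 10.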

See the repository for an outline of our work, extra materials (long video, poster) and the code.

Full details are in our NeurIPS 2019 paper.

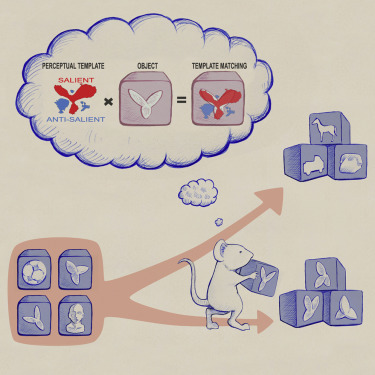

Accuracy of Rats in Discriminating Visual Objects Is Explained by the Complexity of Their Perceptual Strategy

Credits to Marco Gigante for his beautiful drawing.

In this project we studied the perceptual strategies of rats engaged in visual discrimination tasks.

With the aid of machine learning techniques based on logistic regression and classification images, we found that:

- the ability of rats to discriminate visual objects varies greatly across subjects

- such variability is accounted for by the diversity of rat perceptual strategies

- animals building richer perceptual templates achieve higher accuracy

- perceptual strategies remain largely invariant across object transformations
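To give a flavour of how logistic regression can yield a classification image, the sketch below fits a regularised logistic model that predicts an observer's binary choice from the pixels of each stimulus. The fitted weights, reshaped to the image size, act as an estimate of the observer's perceptual template. This is a minimal sketch under stated assumptions and not the analysis pipeline of the paper: the function name `fit_logistic_template`, the plain gradient-descent optimiser and all default parameters are hypothetical.

```python
import numpy as np


def fit_logistic_template(stimuli, choices, l2=1.0, lr=0.5, n_iter=500):
    """Fit choice ~ sigmoid(stimulus . w + b) by gradient descent.

    stimuli: array (n_trials, n_pixels), one flattened image per trial.
    choices: array (n_trials,) of 0/1 responses.
    Returns the weight vector w (the estimated template) and the bias b.
    Hypothetical helper written for illustration only.
    """
    x = np.asarray(stimuli, dtype=float)
    y = np.asarray(choices, dtype=float)
    n_trials, n_pixels = x.shape

    w = np.zeros(n_pixels)
    b = 0.0
    for _ in range(n_iter):
        # Predicted probability of choosing response 1 on each trial.
        p = 1.0 / (1.0 + np.exp(-(x @ w + b)))
        # Gradient of the mean negative log-likelihood plus an L2 penalty on w.
        grad_w = x.T @ (p - y) / n_trials + l2 * w / n_trials
        grad_b = np.mean(p - y)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b
```

With a simulated observer whose choices depend on a known template applied to random-noise stimuli, `w.reshape(height, width)` can be compared against that template to check that the fit recovers it.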

For this work, we had the honour of receiving a referral by Philippe G. Schyns in Current Biology.

Full details are in the paper.